Keycloak as an OIDC provider for Kubernetes

The workflow

- The client requests an ID token from the identity provider (IdP), with claims for its identity (name) and the groups it belongs to

- The client then requests access to Kubernetes, presenting the ID token obtained from the IdP

- This token (which contains the name and groups claims) is sent with each request to the API Server

- The API Server in turn verifies the ID token (signature, issuer, audience and expiry) using the signing keys published by the identity provider

- If the token is valid, then the API Server will check if the request is authorized based on the token’s claims and the configured RBAC (by matching it with the corresponding resources)

- Finally, the actions will be performed or denied

- A response is sent back to the client

From the user’s perspective, once everything is set up, we will perform these actions to obtain access to the cluster:

- Get an ID token (and a refresh token) from the identity provider (we will request the tokens from Keycloak).

- Set a user’s credentials for kubectl.

- Set a new kubectl context with this user and a configured cluster (for example, minikube).

- Done: use the context and issue commands.

From the Keycloak admin’s perspective, we will:

- Create a client (in our example, a public client, i.e. one with no client secret)

- Create some basic claims for identification and management of users and groups, specifically:

- name

- groups

- Place the target users within the corresponding group

From the Kubernetes admin’s perspective, we will:

- Configure the required RBAC resources: for example, a ClusterRole with the permitted operations and a ClusterRoleBinding that matches the desired group.

- Configure the API Server to use Keycloak as an OIDC provider

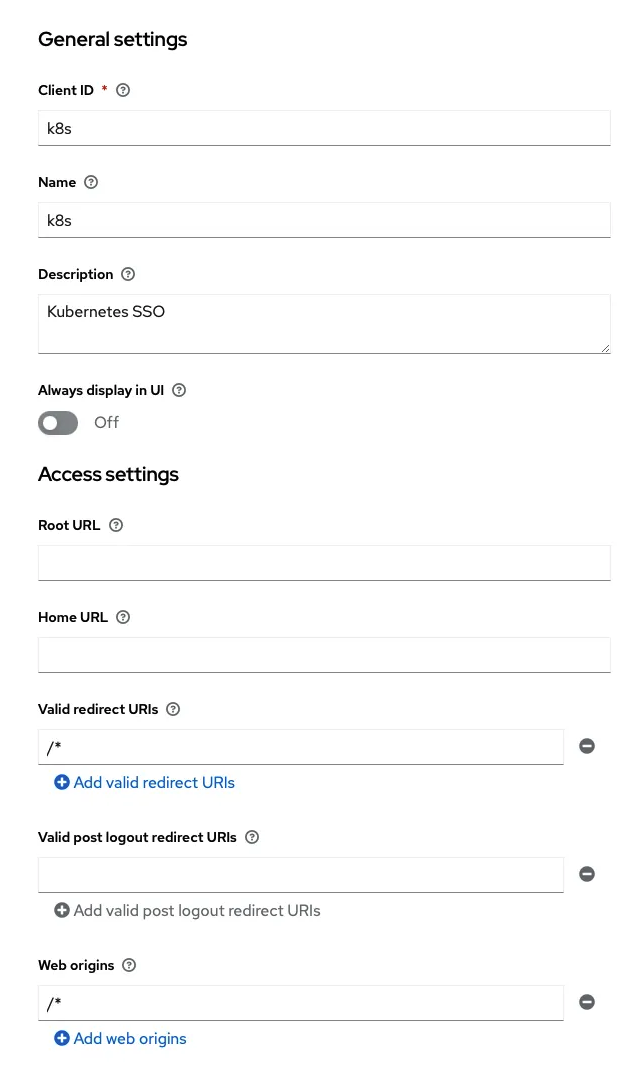

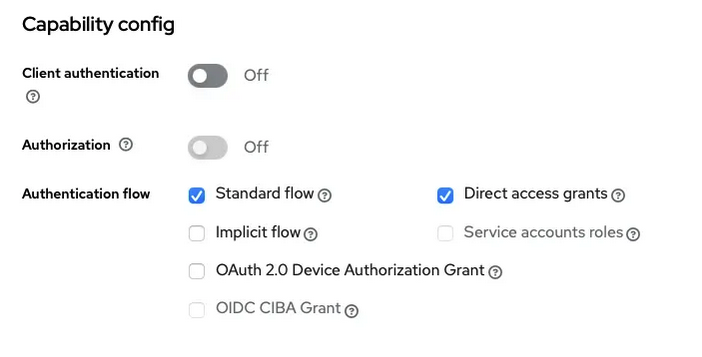

Configure a Keycloak client for Kubernetes SSO


- I am allowing all redirects and all web origins, which is not advisable in production.

- Please change these values to your own redirect URLs to improve security.

- I have left only the Standard Flow and Direct Access Grants (for username and password sign-in) enabled.

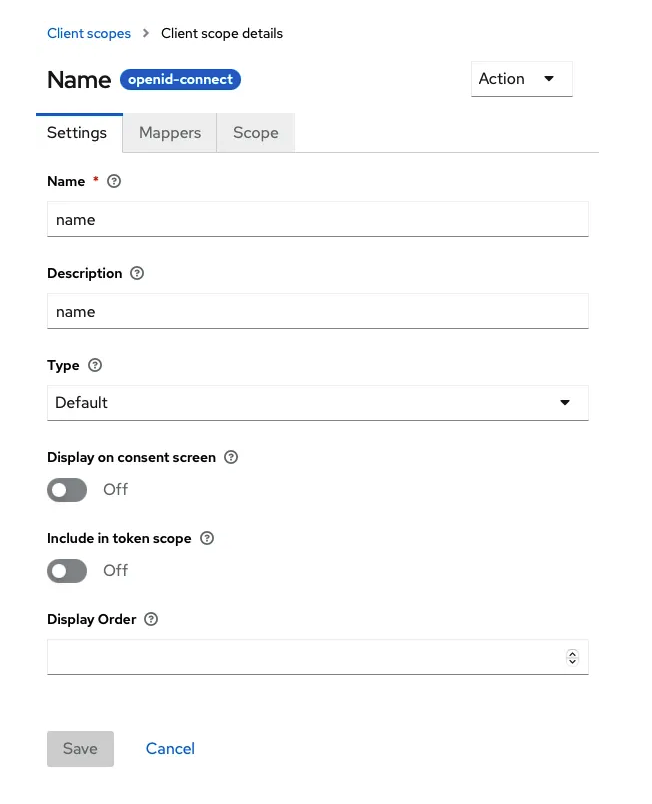

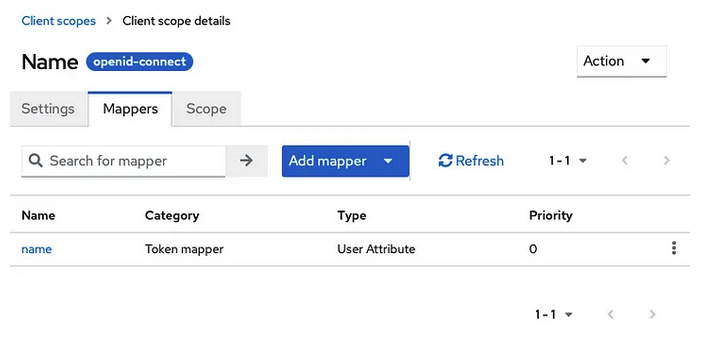

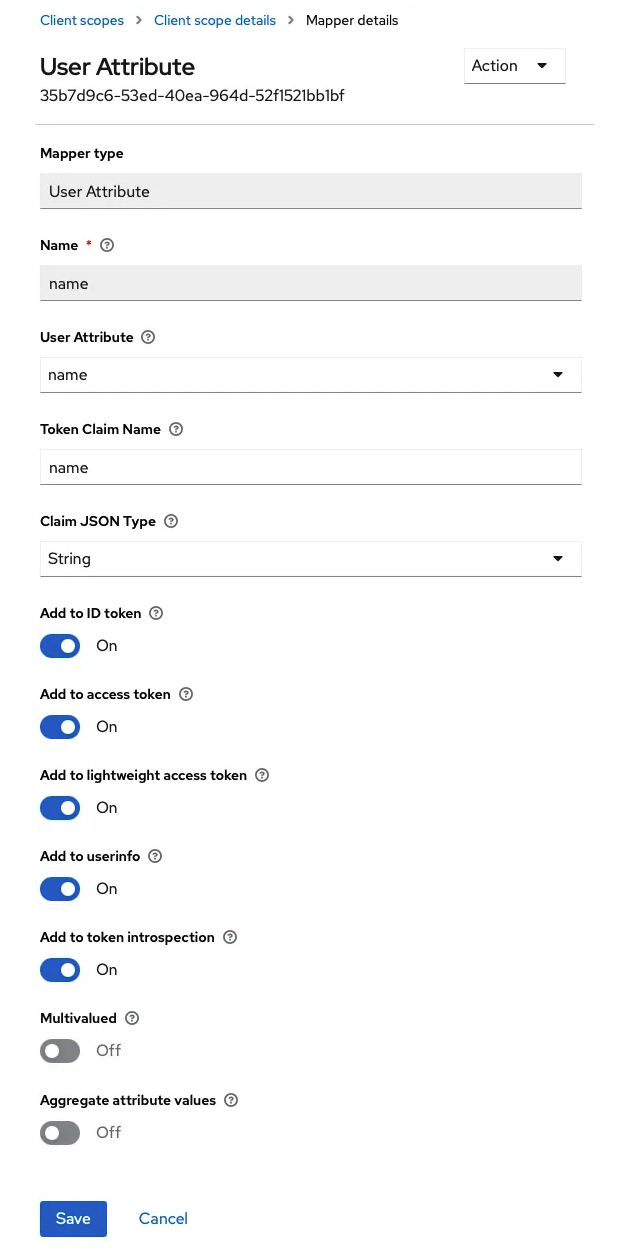

Configuring the name and groups claims

We want to make those two claims available in the ID token. For that we will:

- Create new client scopes

- Specify how to map them to the token

- Configure those new scopes in the Keycloak client that we created for Kubernetes authentication

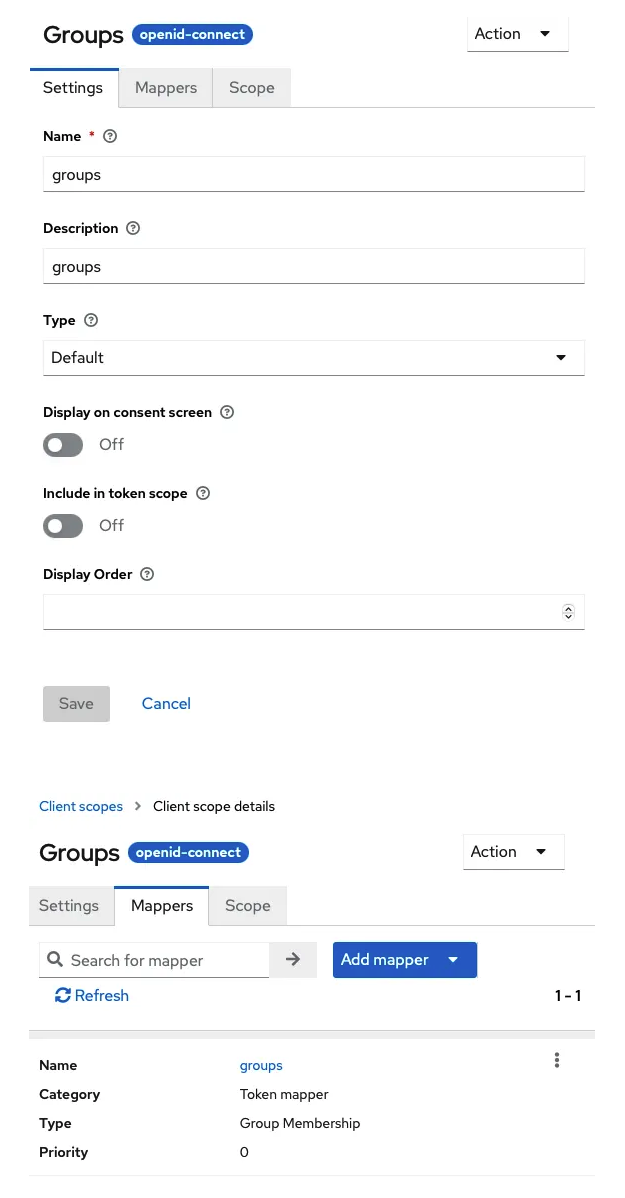

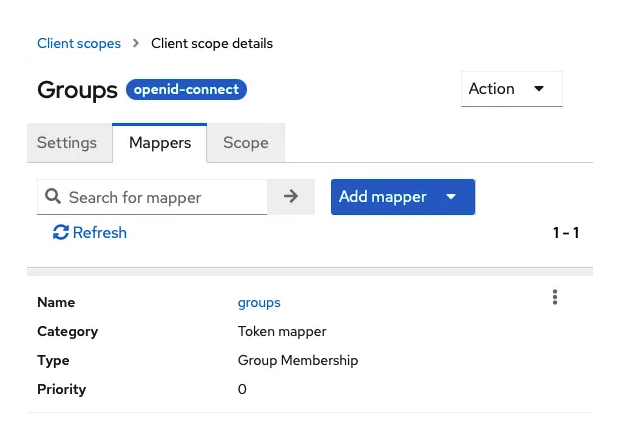

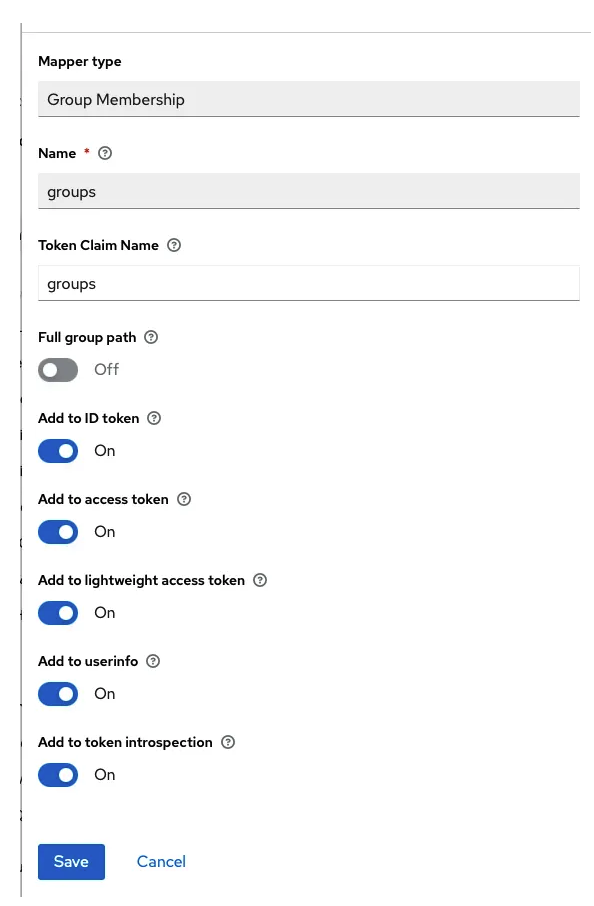

The group scope:

Gotchas

- Forgetting to strip the path from the group names (turn off the mapper’s “Full group path” option)

- Forgetting to add this to the ID Token!

- Forgetting to add this to the user info (if you plan to validate the token before submitting it in each request)

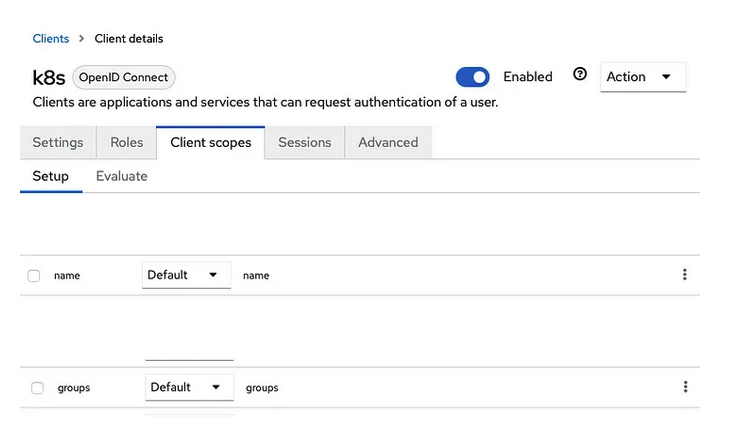

The “Kubernetes” Client, Client Scopes configuration:

- Add the client scopes name and groups

- Make them Default so that they are included in every token without having to be requested explicitly

Common CLI environment variables

To make it easier to go through the rest of the steps we will use some environment variables:

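The original variable values are not shown here, so the following is only a minimal sketch. `OIDC_ISSUER_URL` and `OIDC_CLIENT_ID` are the names used later in this article; `KEYCLOAK_URL`, `KEYCLOAK_REALM`, `K8S_USER` and every value below are placeholders I am assuming for illustration.

```shell
# Hypothetical values: replace the host, realm and client with your own.
export KEYCLOAK_URL="${KEYCLOAK_URL:-https://keycloak.example.com}"   # assumed host
export KEYCLOAK_REALM="${KEYCLOAK_REALM:-demo}"                       # assumed realm name

# The issuer URL is the base URL plus the realm.
# Older Keycloak releases serve realms under /auth/realms/ instead of /realms/.
export OIDC_ISSUER_URL="${KEYCLOAK_URL}/realms/${KEYCLOAK_REALM}"
export OIDC_CLIENT_ID="${OIDC_CLIENT_ID:-kubernetes}"                 # assumed client name
export K8S_USER="${K8S_USER:-k8suser}"                                # the example user

echo "Issuer:    ${OIDC_ISSUER_URL}"
echo "Discovery: ${OIDC_ISSUER_URL}/.well-known/openid-configuration"
```

Opening the printed discovery URL in a browser is a quick way to confirm that the issuer URL is right before touching the cluster.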

K8S: Configure Kubernetes API Server

We will set up minikube, passing the configuration flags for the API Server directly on the command line.

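As a sketch, the start command can be reassembled from the flags that this section breaks down. Wrapping it in a function named `start_minikube_oidc` is my own choice so that nothing runs until you call it; it assumes `OIDC_ISSUER_URL` and `OIDC_CLIENT_ID` are already set.

```shell
# Start minikube with the API server configured for OIDC.
# Expects OIDC_ISSUER_URL and OIDC_CLIENT_ID in the environment.
start_minikube_oidc() {
  : "${OIDC_ISSUER_URL:?set OIDC_ISSUER_URL first}"
  : "${OIDC_CLIENT_ID:?set OIDC_CLIENT_ID first}"
  minikube start \
    --embed-certs \
    --extra-config=apiserver.authorization-mode=Node,RBAC \
    --extra-config=apiserver.oidc-issuer-url="${OIDC_ISSUER_URL}" \
    --extra-config=apiserver.oidc-client-id="${OIDC_CLIENT_ID}" \
    --extra-config=apiserver.oidc-username-claim=name \
    --extra-config=apiserver.oidc-username-prefix=- \
    --extra-config=apiserver.oidc-groups-claim=groups \
    --extra-config=apiserver.oidc-groups-prefix=
}
```

Run `start_minikube_oidc` once the environment variables are in place.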

Command Line breakdown

- --embed-certs: adds the certificates placed under $HOME/.minikube/certs/

- --extra-config=apiserver.authorization-mode=Node,RBAC: enables RBAC on the cluster while keeping the local node access (to prevent lockout)

- --extra-config=apiserver.oidc-issuer-url=${OIDC_ISSUER_URL}: the issuer URL (the URL that includes the realm or, for another OpenID provider, the URL under which .well-known/openid-configuration can be found)

- --extra-config=apiserver.oidc-client-id=${OIDC_CLIENT_ID}: the client ID (in our case, the name of the client in the realm)

- --extra-config=apiserver.oidc-username-claim=name: the token claim used as the username

- --extra-config=apiserver.oidc-username-prefix=-: intentionally set to a single dash, which prevents any prefix from being prepended to the name

- --extra-config=apiserver.oidc-groups-claim=groups: the token claim used for the group names

- --extra-config=apiserver.oidc-groups-prefix=: intentionally left blank to prevent the API Server from adding any prefix to the group names

Gotchas

- The config is not yet ready or you have not configured an alternative to RBAC

- The certificate is not trusted (because it is self-signed or it is not available in the trust store)

K8S: Configure RBAC

For now, we will create a ClusterRole and a ClusterRoleBinding to demonstrate how to grant “ReadOnly” rights to Namespaces and Pods within the cluster:

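A minimal sketch of what such a manifest might look like, written to `rbac.yaml` with a heredoc. The group name `k8s` and the read-only scope on namespaces and pods come from this article; the resource names `oidc-read-only` and `oidc-read-only-k8s` are placeholders of mine.

```shell
# Write a read-only ClusterRole plus a binding for the Keycloak group "k8s".
# The metadata names below are placeholders, not the author's originals.
cat > rbac.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: oidc-read-only
rules:
  - apiGroups: [""]
    resources: ["namespaces", "pods"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: oidc-read-only-k8s
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: oidc-read-only
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: Group
    name: k8s
EOF
```

Because the groups prefix on the API Server is left empty, the subject name must match the group claim exactly as it appears in the token.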

How does this work?

The ClusterRole defines which operations can be performed on the API Server, and the ClusterRoleBinding binds the role to a specific subject. Notice the subjects block:

It references the name k8s of kind Group.

By assigning this group (“k8s”) to our users, they will get read-only access to the cluster’s namespaces and pods.

Apply the config using the current local node credentials:

kubectl apply -f rbac.yaml

Configure the Client (kubectl)

As explained in the introductory section, we will first obtain an ID token and then configure kubectl with it.

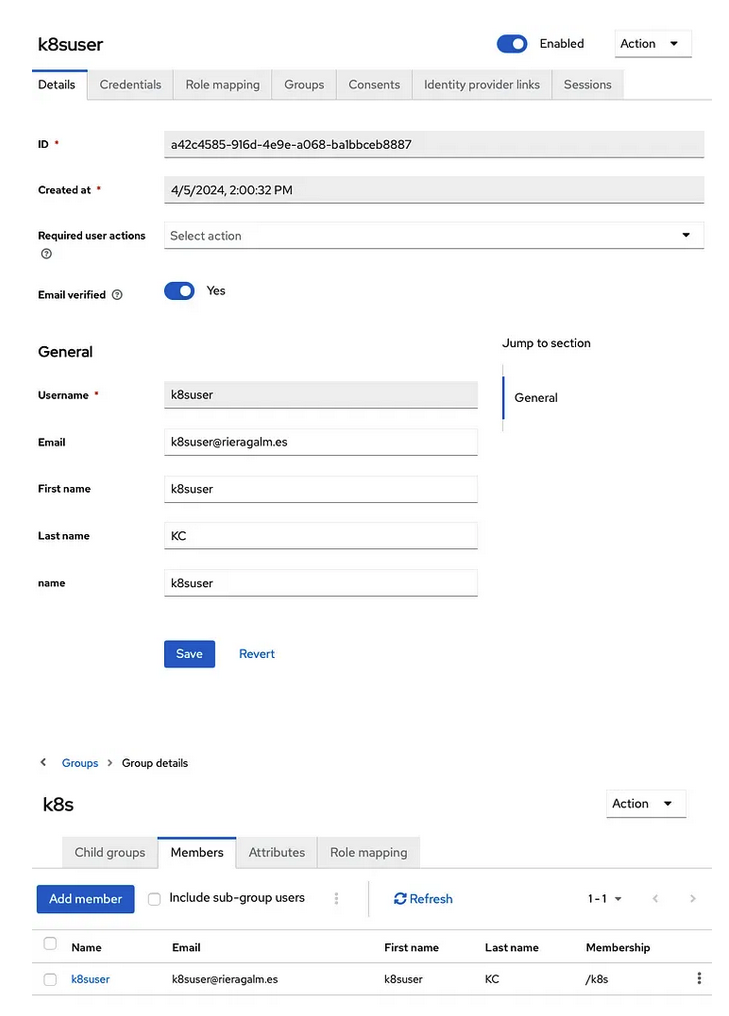

For this purpose, I will create a user called k8suser in Keycloak and assign it to the group k8s. You can skip this part if you already have other users and/or groups; just adjust to your needs.

Preparing the command line to request the ID Token

For this I will use curl and jq to get a response from Keycloak.

I will then extract the tokens and use them to configure kubectl.

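One way to request the tokens is the password grant against Keycloak's token endpoint, which works here because Direct Access Grants were left enabled on the client. This is a sketch under that assumption; the function name `request_tokens` and its argument order are mine.

```shell
# Request tokens from Keycloak with the Direct Access (password) grant.
# Usage: request_tokens <issuer-url> <client-id> <username> <password>
request_tokens() {
  local issuer="$1" client_id="$2" username="$3" password="$4"
  curl --silent --show-error --fail \
    --request POST "${issuer}/protocol/openid-connect/token" \
    --data-urlencode "grant_type=password" \
    --data-urlencode "client_id=${client_id}" \
    --data-urlencode "username=${username}" \
    --data-urlencode "password=${password}" \
    --data-urlencode "scope=openid"   # "openid" is what makes Keycloak issue an ID token
}
```

For example, `RESPONSE=$(request_tokens "$OIDC_ISSUER_URL" "$OIDC_CLIENT_ID" k8suser 'your-password')` stores the JSON response for the next step. If Keycloak uses a self-signed certificate, curl will also need `--cacert` pointing at the CA file.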

Extract the tokens:

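The token response is a JSON object with `access_token`, `id_token` and `refresh_token` fields, so jq can pull each one out. A small sketch, with `token_field` as a hypothetical helper name:

```shell
# Print one field of the token response (id_token, refresh_token, access_token).
# Prints nothing if the field is missing.
# Usage: token_field <json-response> <field>
token_field() {
  printf '%s' "$1" | jq --raw-output --arg field "$2" '.[$field] // empty'
}
```

With the response stored in `RESPONSE`, the three tokens could be captured as `ID_TOKEN=$(token_field "$RESPONSE" id_token)`, `REFRESH_TOKEN=$(token_field "$RESPONSE" refresh_token)` and `ACCESS_TOKEN=$(token_field "$RESPONSE" access_token)`.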

Now we can optionally check if we received all the information that we need by querying the user info endpoint with the access token.

Afterwards we do not need the access token anymore and we could discard it.

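A sketch of the user info query, assuming the standard Keycloak endpoint path under the issuer URL. The function name `userinfo` is hypothetical.

```shell
# Query Keycloak's userinfo endpoint with the access token.
# Usage: userinfo <issuer-url> <access-token>
userinfo() {
  curl --silent --show-error --fail \
    --header "Authorization: Bearer $2" \
    "$1/protocol/openid-connect/userinfo"
}
```

Piping the result through jq, as in `userinfo "$OIDC_ISSUER_URL" "$ACCESS_TOKEN" | jq .`, makes the claims easier to read.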

Note: Check that name and groups appear and they are populated properly!

Gotchas

- You forgot to make the client scopes Default, or you are not requesting those scopes, so the claims are not available in the ID Token
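Since the API Server reads the claims from the ID token itself rather than from the user info endpoint, it can help to decode the token payload locally as well. The sketch below only decodes the middle segment of the JWT and does not verify the signature; `jwt_payload` is a helper name I made up.

```shell
# Decode the payload (second segment) of a JWT. No signature verification.
# Usage: jwt_payload <token>
jwt_payload() {
  local payload
  # Take the middle segment and map base64url characters back to base64.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # JWTs drop the trailing "=" padding; restore it before decoding.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload="${payload}="
  done
  printf '%s' "$payload" | base64 --decode
}
```

Running `jwt_payload "$ID_TOKEN" | jq '{name, groups}'` should show both claims populated; if either is null, revisit the client scopes and mappers.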

Kubectl: create a set of credentials

Now that we have the tokens, let’s create a new set of kubectl credentials!

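One option, sketched here, is kubectl's built-in `oidc` auth provider, which stores the ID token together with the refresh token. I am assuming this is the approach intended, since both tokens were requested; because the Keycloak client is public, no client secret is passed. The function name and argument order are mine.

```shell
# Register OIDC credentials for a kubectl user.
# Usage: set_oidc_credentials <user> <issuer-url> <client-id> <id-token> <refresh-token>
set_oidc_credentials() {
  kubectl config set-credentials "$1" \
    --auth-provider=oidc \
    --auth-provider-arg=idp-issuer-url="$2" \
    --auth-provider-arg=client-id="$3" \
    --auth-provider-arg=id-token="$4" \
    --auth-provider-arg=refresh-token="$5"
}
```

A call would look like `set_oidc_credentials k8suser "$OIDC_ISSUER_URL" "$OIDC_CLIENT_ID" "$ID_TOKEN" "$REFRESH_TOKEN"`.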

Now we have to create a new configuration context to use this newly created user in an existing cluster:


And finally we need to use it:

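The last two steps, creating the context and switching to it, can be sketched as a single helper. The function name `use_oidc_context` and any context name you pass to it are hypothetical.

```shell
# Create a context that pairs a user with a cluster, then switch to it.
# Usage: use_oidc_context <context-name> <cluster> <user>
use_oidc_context() {
  kubectl config set-context "$1" --cluster="$2" --user="$3" &&
    kubectl config use-context "$1"
}
```

For the minikube example this might be `use_oidc_context k8suser-minikube minikube k8suser`. Your original admin context stays in the kubeconfig, so you can switch back with `kubectl config use-context minikube`.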

Kubectl: check the access

We will now check our identity and try a get operation to list the pods on the cluster:

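A possible set of checks, as a sketch: the exact commands used originally are not shown, so these are my suggestions. `kubectl auth whoami` is only available in newer kubectl and API Server releases; the other commands work on older versions too.

```shell
# Report the identity the API server sees and probe the read-only permissions.
check_access() {
  kubectl auth whoami                                  # needs a recent kubectl and cluster
  kubectl auth can-i list pods --all-namespaces        # should answer "yes"
  kubectl auth can-i delete pods --all-namespaces      # should answer "no" for a read-only role
  kubectl get pods --all-namespaces
}
```

After switching to the OIDC context, run `check_access`. A Forbidden error on the last command usually means the groups claim did not match the ClusterRoleBinding subject.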